How to Speed Up Amazon ECS Container Deployments

Amazon Elastic Container Service (Amazon ECS) is a fully managed container orchestration service that makes it easy for you to deploy, manage and scale containerized applications. Although ECS is a managed service and hides many complexities under the hood, there have been many cases where startups have not been able to take full advantage of ECS due to slow deployments. In this article, we will share some tips to improve deployment speed on ECS. We will try to cover both EC2 tasks and Fargate tasks.

Morgan Perry

April 20, 2022 · 7 min read

#Why do deployments on Amazon ECS become slow?

An average web application usually does not take very long to set up and run on ECS. You download the project from a repository, install its dependencies, build the source code, and launch it. So what is causing delays in the deployments? Here are a couple of reasons:

- The EC2 instances or Fargate tasks that the ECS cluster manages need to download the latest Docker image from the ECR repository. Image size varies with the application and its dependencies, and the bigger the image, the longer the download takes. After the download finishes, the container still has to start, which takes additional time.

- Whenever a new task instance is initialized, it needs to pass a set of health checks in order to be marked as healthy and fully deployed. During this period, to prevent downtime, the ECS cluster keeps the old task instances running in parallel with the new ones until the new ones have passed the health checks. How quickly the new task instances are labeled healthy, and how fast they replace the old ones, depends on the configuration of your health checks. It also depends on how many free resources are available on the ECS cluster.

- Shutting down tasks can also take time. When we run an ECS service update, a SIGTERM signal is sent to the running containers. Your application needs to finish any in-flight work and release its remaining resources before it shuts down completely.
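As an illustration of the last point, here is a minimal Python sketch of a process that reacts to SIGTERM by finishing its current loop iteration and exiting cleanly. The names `shutdown_requested`, `handle_sigterm`, and `serve` are hypothetical, and the loop body is only a placeholder for real request handling.

```python
import signal
import threading

# Set when the container runtime asks the process to stop.
shutdown_requested = threading.Event()


def handle_sigterm(signum, frame):
    """Record the stop request instead of exiting abruptly."""
    shutdown_requested.set()


# Register the handler (must be done from the main thread).
signal.signal(signal.SIGTERM, handle_sigterm)


def serve(poll_seconds: float = 0.1) -> str:
    """Placeholder main loop: runs until SIGTERM has been received."""
    while not shutdown_requested.is_set():
        # A real service would accept and handle one request here.
        shutdown_requested.wait(poll_seconds)
    # A real service would finish in-flight work and close connections here.
    return "stopped cleanly"
```

The faster the application exits after SIGTERM, the sooner ECS can treat the old task as stopped.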

The following tips can help you speed up your Amazon ECS deployments.

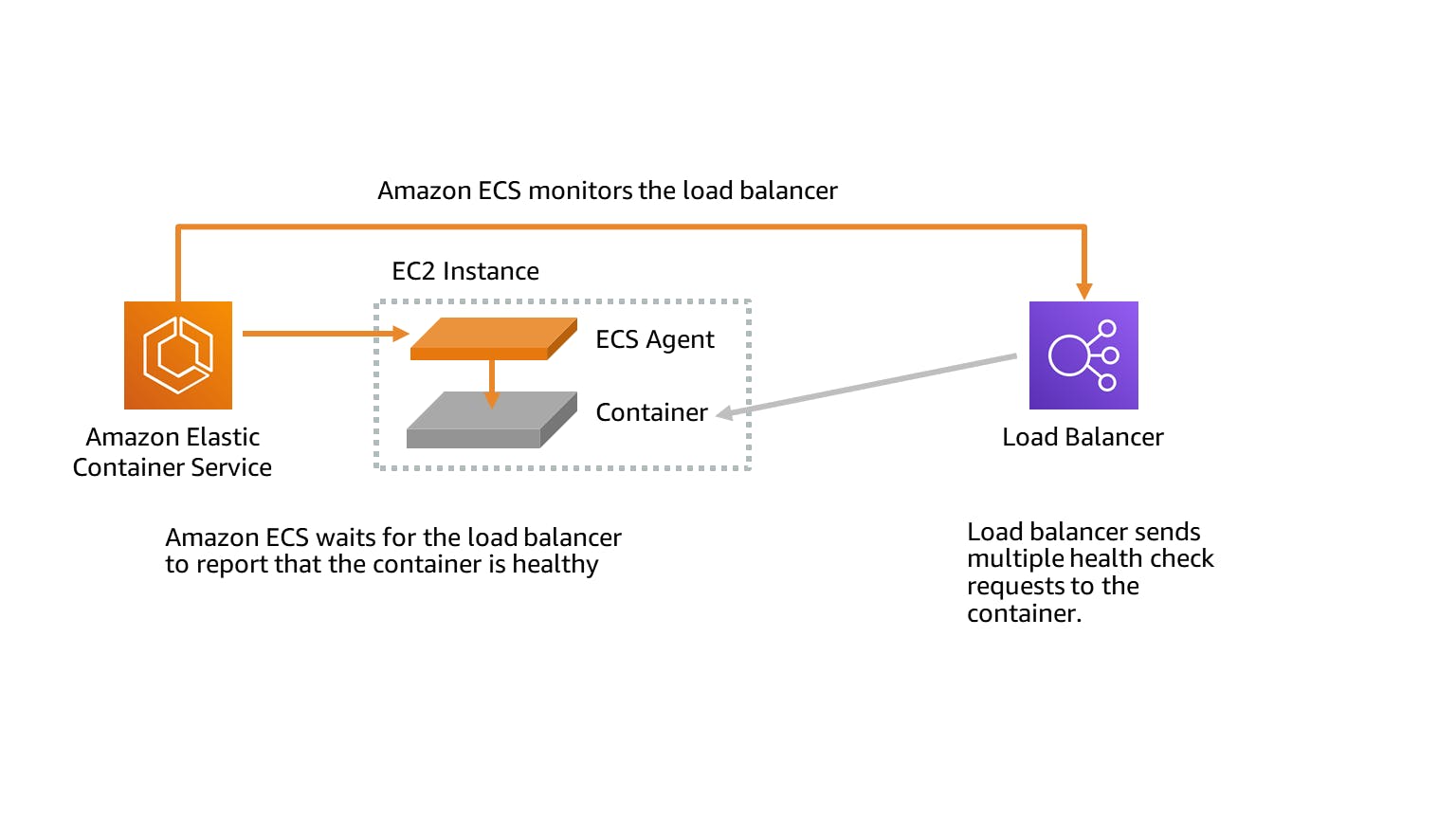

#Tune your load balancer health check parameters

As the diagram below shows, the load balancer periodically sends health checks to the Amazon ECS container. The Amazon ECS agent waits for the load balancer to report the container as healthy before it considers the container healthy itself.

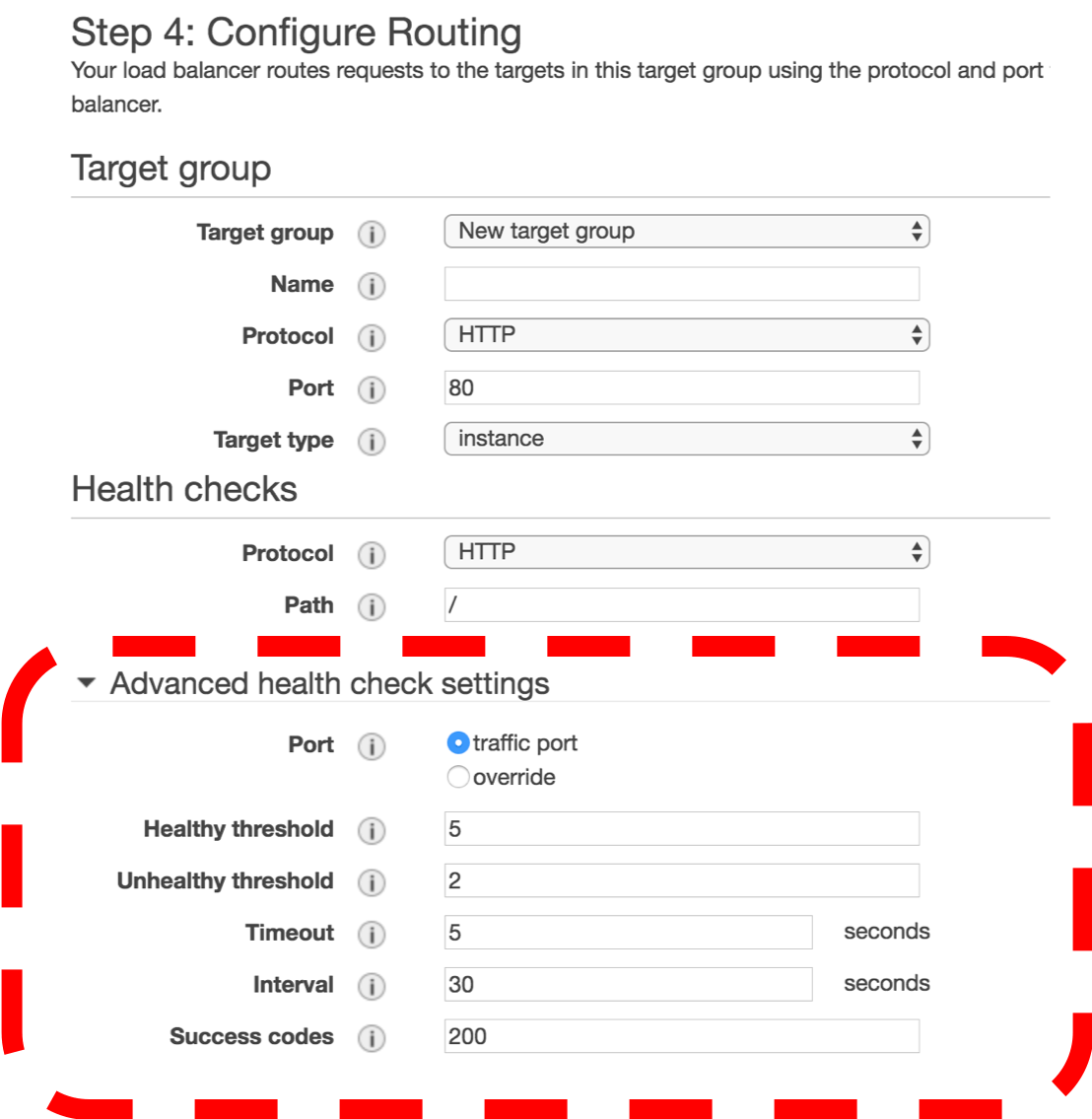

Below are two load balancer health check settings that you can tweak to improve speed. Let’s look at the default settings first, and then at values that reduce the wait.

The default settings for target group health checks are:

- HealthCheckIntervalSeconds: 30 seconds

- HealthyThresholdCount: 5

By default, the load balancer requires five passing health checks, made 30 seconds apart, before it declares the target container healthy. That adds up to 2 minutes and 30 seconds. Because Amazon ECS uses the load balancer health check as a mandatory part of determining container health, it takes at least 2 minutes and 30 seconds by default before ECS considers a freshly launched container healthy.

Most modern runtimes get a service up and running quickly, so let’s change the settings as follows:

- HealthCheckIntervalSeconds: 5 seconds

- HealthyThresholdCount: 2

With this configuration, it takes only about 10 seconds before the load balancer and ECS consider the container healthy.
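The arithmetic behind both numbers can be sketched in Python. The helper names and the idea of building the request as a plain dictionary are assumptions made for illustration; the dictionary keys are meant to match the keyword arguments of boto3's `elbv2` `modify_target_group` call, which this sketch prepares but does not send.

```python
def min_seconds_to_healthy(interval_seconds: int, healthy_threshold: int) -> int:
    """Minimum wait before a new target is marked healthy, per the article's arithmetic."""
    return interval_seconds * healthy_threshold


def build_health_check_update(target_group_arn: str,
                              interval_seconds: int = 5,
                              healthy_threshold: int = 2) -> dict:
    """Build keyword arguments for elbv2.modify_target_group (nothing is sent here)."""
    return {
        "TargetGroupArn": target_group_arn,
        "HealthCheckIntervalSeconds": interval_seconds,
        "HealthyThresholdCount": healthy_threshold,
    }


print(min_seconds_to_healthy(30, 5))  # default settings: 150 seconds
print(min_seconds_to_healthy(5, 2))   # tuned settings: 10 seconds
```

With boto3 installed and AWS credentials configured, the result could be passed as `boto3.client("elbv2").modify_target_group(**build_health_check_update(arn))`, where `arn` is your own target group ARN. If the target group's health check timeout is not shorter than the new interval, the API may reject the change, so the timeout might need lowering as well.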

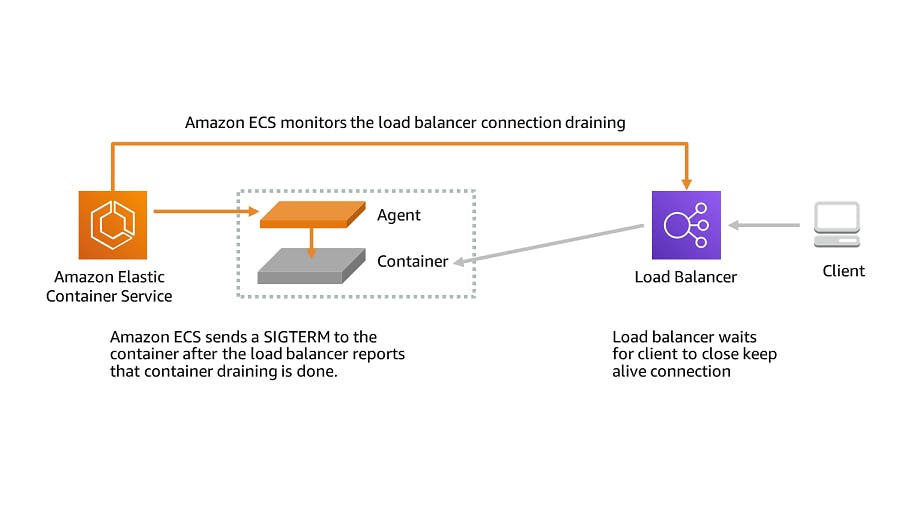

#Load balancer connection draining

Most modern browsers and mobile applications maintain a keep-alive connection to the container service. This enables them to reuse the existing connection for subsequent requests. When you want to stop traffic to a container, you notify the load balancer.

The following diagram describes the load balancer connection draining process. When you tell the load balancer to stop traffic to the container, it periodically checks to see if the client has closed the keep-alive connection or not. The Amazon ECS agent monitors the load balancer and waits for the load balancer to report that the keep-alive connection is closed on its own. It will only break the keep-alive connections forcefully after a period called the deregistration delay.

The default settings for deregistration delay are the following:

- deregistration_delay.timeout_seconds: 300 seconds

By default, the load balancer waits up to 300 seconds (5 minutes) for existing keep-alive connections to close on their own before forcefully breaking them. This is a lot of time. ECS waits for this deregistration to complete before sending a SIGTERM signal to your container, so that requests still in progress do not get dropped. If your service handles long-running requests such as image processing or file uploads, you should not change this parameter. However, if your service is something like a REST API that responds within a second or two, you can lower this value to shorten deployments.

- deregistration_delay.timeout_seconds: 5 seconds

This will ensure that the load balancer will wait only 5 seconds before breaking any keep-alive connections between the client and the backend server. Then it will report to ECS that draining is complete, and ECS can stop the task.
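As a sketch, the attribute change could be prepared in Python like this. The helper name `build_deregistration_delay_update` is hypothetical; the returned dictionary is shaped for boto3's `elbv2` `modify_target_group_attributes` call, where attribute values are passed as strings, and the 0 to 3600 range check reflects the documented limits for this attribute.

```python
def build_deregistration_delay_update(target_group_arn: str,
                                      timeout_seconds: int = 5) -> dict:
    """Build keyword arguments for elbv2.modify_target_group_attributes (not sent here)."""
    if not 0 <= timeout_seconds <= 3600:
        raise ValueError("deregistration delay must be between 0 and 3600 seconds")
    return {
        "TargetGroupArn": target_group_arn,
        "Attributes": [
            {
                "Key": "deregistration_delay.timeout_seconds",
                "Value": str(timeout_seconds),  # attribute values are strings
            },
        ],
    }
```

Keeping the value above your slowest expected response time is a reasonable rule of thumb, so that in-flight requests can finish before connections are cut.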

#Look at the container images

Large image size is a pretty common reason for slowness. The larger the image, the slower the deploy since more time is spent pushing and pulling from the registry. Note that Fargate does not cache images, and therefore the whole image needs to be pulled from the registry whenever a task runs. Here are a few tips for the images used in ECS, especially for Fargate tasks:

- Use slimmer base images. For example, a full image (full versions of Debian, Ubuntu, etc.) might take longer to pull and start than its slim variant (Debian-slim, Ubuntu-slim, etc.) or a smaller base image (Alpine), because there is more to download and extract.

- Limit the data written to the container layer. This tip helps EC2 tasks as well.

With Docker, it’s very easy to bloat your image unknowingly. Each RUN, COPY, and ADD instruction creates a new layer, and all layers are saved separately. Therefore, if a big file is generated by one instruction and removed by a later one in the Dockerfile, it still adds to the image size.

- Have the repository that stores the image in the same Region as the task.

- Use a larger task size with additional vCPUs. The larger task size can help reduce the time it takes to extract the image when a task is launched.
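The point about layers can be illustrated with a deliberately simplified Python model in which image size is the sum of what each layer adds. The `Layer` class, the instruction strings, and the sizes are all made up for illustration; real layer sizes depend on your build.

```python
from dataclasses import dataclass


@dataclass
class Layer:
    instruction: str
    added_mb: int  # what this layer adds; a later delete never subtracts it


def image_size_mb(layers: list) -> int:
    """Total image size in this simplified model: layers only ever add."""
    return sum(layer.added_mb for layer in layers)


# Download, unpack, and delete in three separate instructions.
separate_steps = [
    Layer("RUN download archive", 500),
    Layer("RUN unpack archive", 120),
    Layer("RUN delete archive", 0),  # hides the file but keeps the 500 MB layer
]

# Same work in one instruction: the archive never lands in a saved layer.
single_step = [
    Layer("RUN download, unpack, and delete archive", 120),
]

print(image_size_mb(separate_steps))  # 620
print(image_size_mb(single_step))     # 120
```

In this model, combining the steps that create and remove a temporary file into one instruction is what keeps the file out of the final image.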

#Fine-tune your task management

To ensure that there's no application downtime, the usual deployment process is as follows:

- Start the new application containers while keeping the existing containers running.

- Check that the new containers are healthy.

- Stop the old containers.

Depending on your deployment configuration and the amount of available space in your cluster, it may take multiple rounds to completely replace all old tasks with new ones. Two ECS service configuration options control how many tasks can be replaced in each round:

- minimumHealthyPercent: 100% (default)

The lower limit on the number of tasks for your service that must remain in the RUNNING state during a deployment.

- maximumPercent: 200% (default)

The upper limit on the number of tasks for your service that are allowed in the RUNNING or PENDING state during a deployment.

If you use the default values for these options together with the default load balancer settings, each new task that starts waits at least 2.5 minutes before it is considered healthy. Additionally, the load balancer might have to wait 5 minutes for the old task to stop.

You can speed up the deployment by setting the minimumHealthyPercent value to 50%. Use the following values for the ECS service configuration options when your tasks are idle for some time and don't have a high utilization rate.

- minimumHealthyPercent: 50%

- maximumPercent: 200%
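To see what these percentages mean for a concrete service, the following Python sketch converts them into task counts, using the rounding described in the ECS documentation (the minimum is rounded up, the maximum is rounded down). The helper names are hypothetical; the dictionary returned by `build_deployment_update` is shaped for boto3's `ecs` `update_service` call and is not sent here.

```python
def deployment_bounds(desired_count: int,
                      minimum_healthy_percent: int,
                      maximum_percent: int) -> tuple:
    """Return (minimum running tasks, maximum total tasks) during a deployment."""
    min_running = -(-desired_count * minimum_healthy_percent // 100)  # round up
    max_total = desired_count * maximum_percent // 100                # round down
    return min_running, max_total


def build_deployment_update(cluster: str, service: str,
                            minimum_healthy_percent: int = 50,
                            maximum_percent: int = 200) -> dict:
    """Build keyword arguments for ecs.update_service (nothing is sent here)."""
    return {
        "cluster": cluster,
        "service": service,
        "deploymentConfiguration": {
            "minimumHealthyPercent": minimum_healthy_percent,
            "maximumPercent": maximum_percent,
        },
    }


print(deployment_bounds(4, 100, 200))  # (4, 8): no old task may stop early
print(deployment_bounds(4, 50, 200))   # (2, 8): two old tasks may stop right away
```

With a desired count of 4, lowering minimumHealthyPercent to 50% lets ECS stop two old tasks immediately, which frees capacity for new tasks when the cluster has little spare room.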

#Conclusion

Slow deployments are a common problem on Amazon ECS. This article has covered fixes for the most frequent causes. ECS works fine for small and simple projects, but as organizations and projects grow, deployments tend to become slower. Another option for container orchestration is Amazon Elastic Kubernetes Service (EKS), which is even more powerful than Amazon ECS. Amazon EKS is an excellent choice for organizations that want to scale their business efficiently. However, managing Amazon EKS is complex and requires a lot of expertise. A modern solution like Qovery helps you take advantage of powerful EKS features without dealing with these technical complexities. It not only saves you time and cost but also simplifies EKS deployments to a great extent. Try Qovery for free!

Your Favorite DevOps Automation Platform

Qovery is a DevOps Automation Platform Helping 200+ Organizations To Ship Faster and Eliminate DevOps Hiring Needs

Try it out now!
